Context: Reproducing game bugs, in our case crash bugs in continuously evolving games such as Minecraft, is a notoriously manual and time-consuming process that is challenging to automate. Despite the success of LLM-driven bug reproduction in other software domains, games, with their complex interactive environments, remain largely unaddressed.

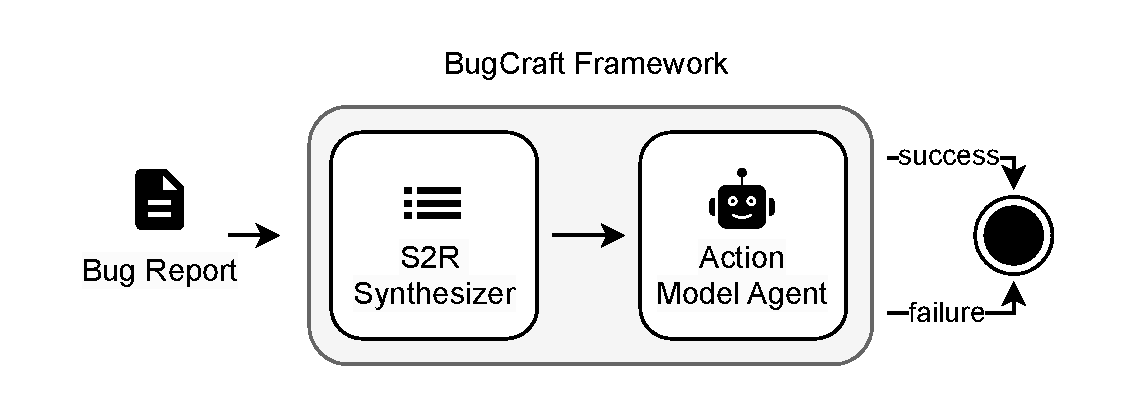

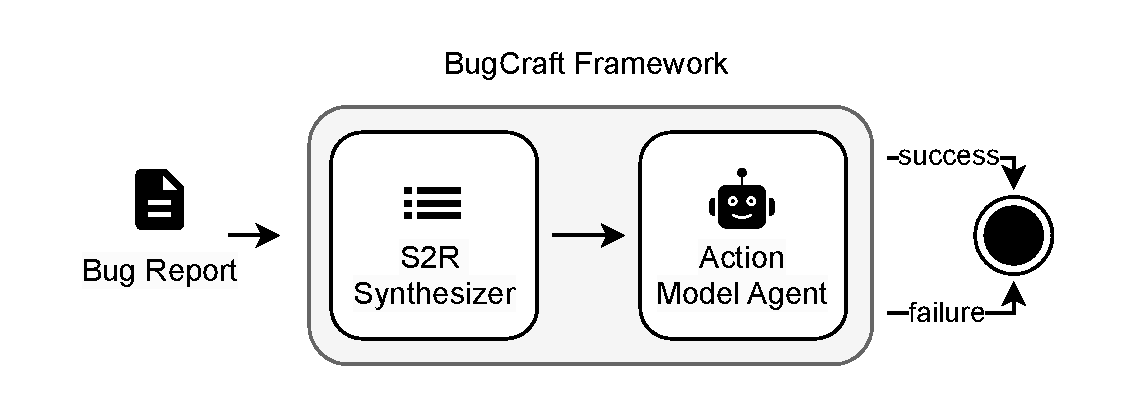

Objective: This paper introduces BugCraft, a novel end-to-end framework designed to automate the reproduction of crash bugs in Minecraft directly from user-submitted bug reports, addressing the critical gap in automated game bug reproduction.

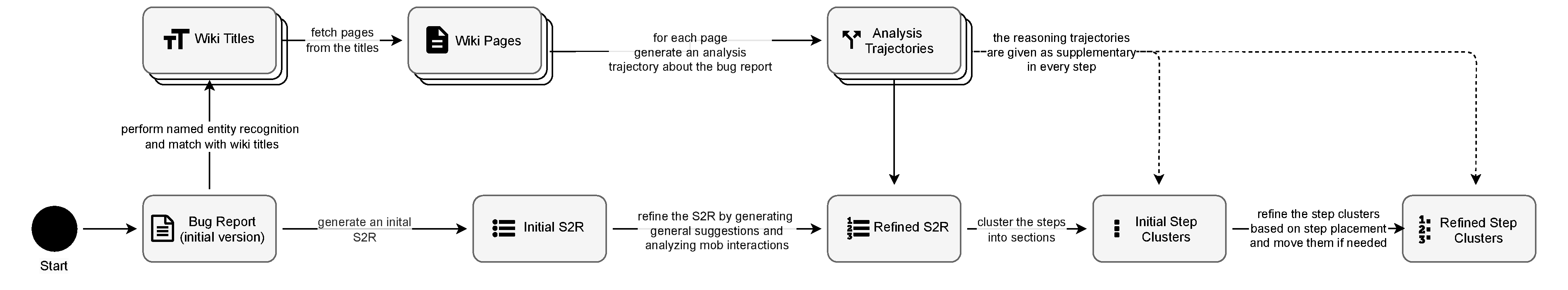

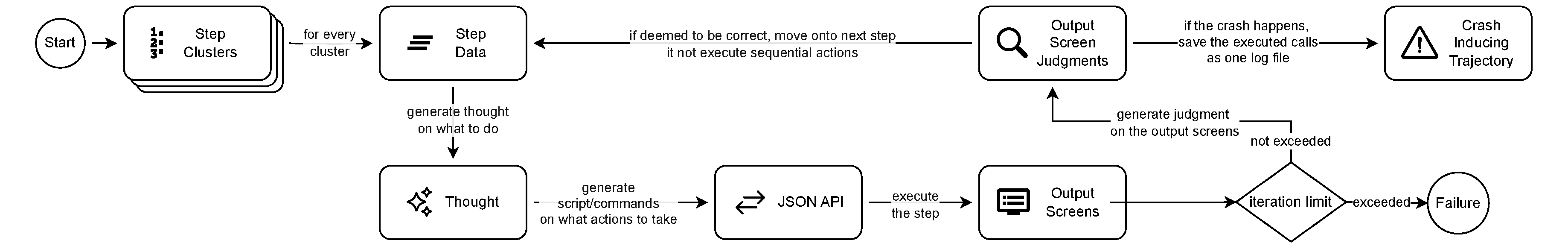

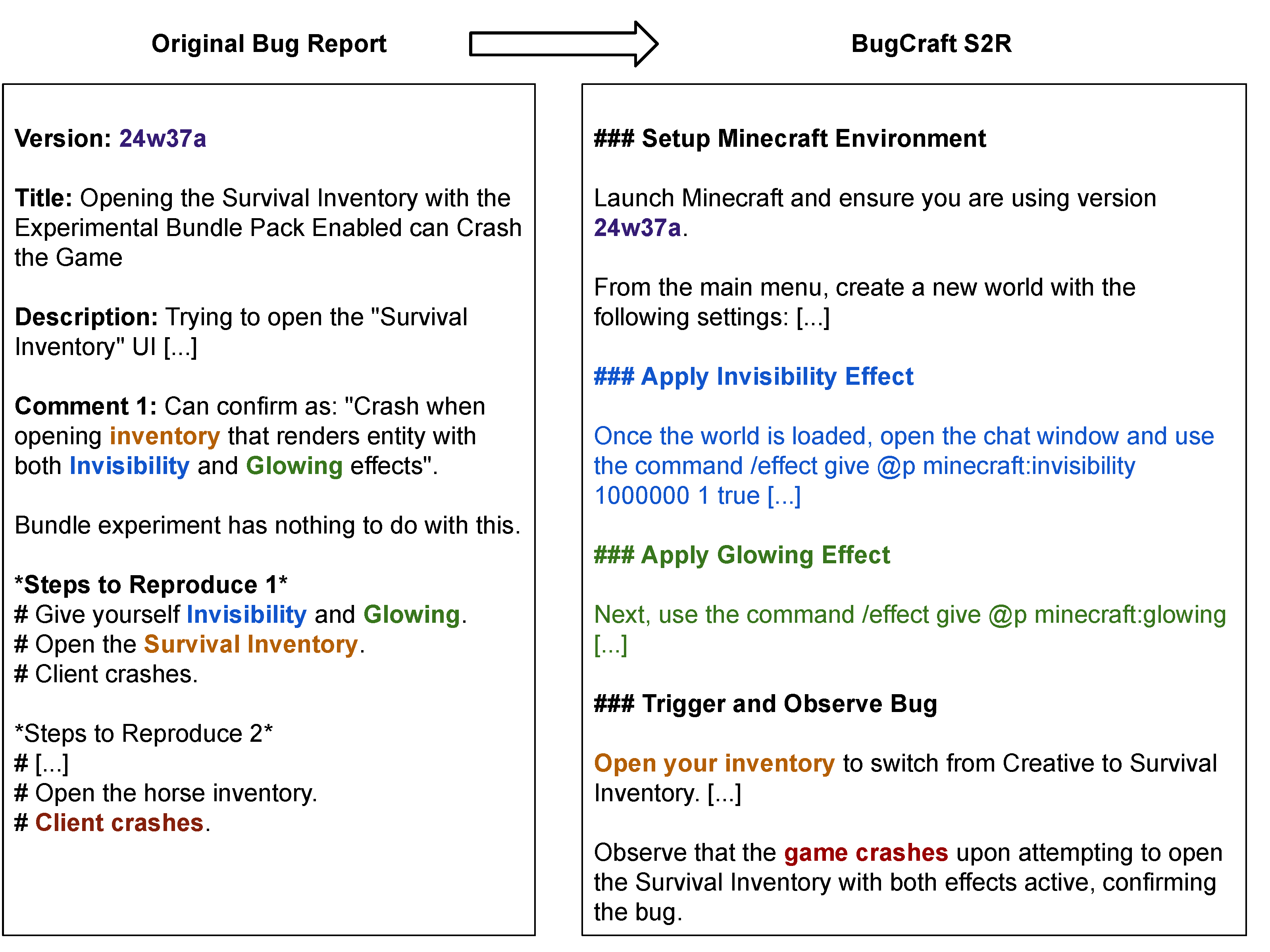

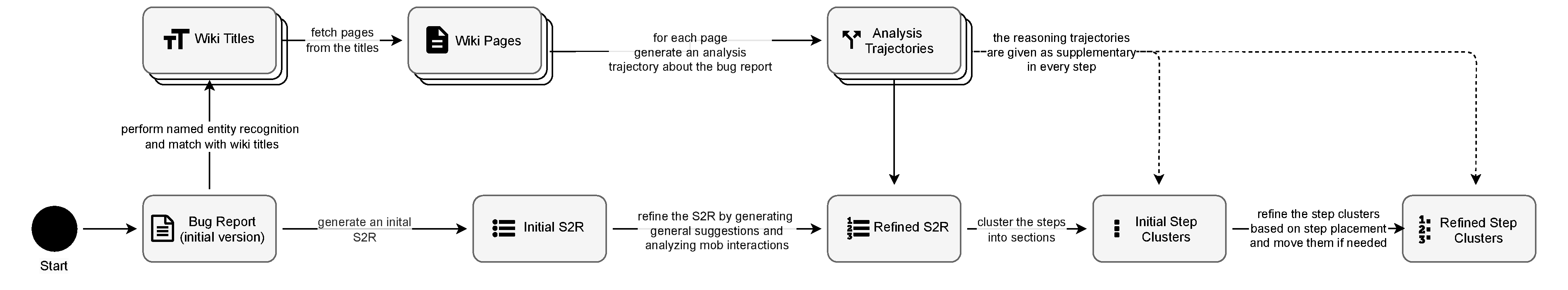

Method: BugCraft employs a two-stage approach: first, a Step Synthesizer leverages LLMs and Minecraft Wiki knowledge to transform bug reports into high-quality, structured steps to reproduce (S2R). Second, an Action Model, powered by vision-based LLM agents (GPT-4o and GPT-4.1) and a custom macro API, executes these S2R steps within Minecraft to trigger the reported crash.
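The two-stage flow described above can be pictured with a minimal, self-contained Python sketch. It is not BugCraft's implementation. Every name in it (`S2RStep`, `synthesize_steps`, `FakeGame`, `execute_steps`, `reproduce`, and the `give`/`use` macros) is hypothetical. Stage 1 is an assumption-laden stand-in that only parses `action: argument` lines, whereas the paper uses LLMs plus Minecraft Wiki knowledge. Stage 2 replaces the vision-based LLM agent with a direct lookup into a toy macro table that runs against a fake game state.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class S2RStep:
    """One structured step to reproduce (hypothetical schema)."""
    action: str         # name of a macro, e.g. "give"
    argument: str = ""  # macro argument, e.g. an item name


def synthesize_steps(bug_report: str) -> List[S2RStep]:
    """Stage 1 stand-in (hypothetical). The paper uses LLMs plus Minecraft
    Wiki knowledge; this sketch only parses lines of the form 'action: arg'."""
    steps: List[S2RStep] = []
    for line in bug_report.splitlines():
        if ":" in line:
            action, argument = line.split(":", 1)
            steps.append(S2RStep(action.strip().lower(), argument.strip()))
    return steps


@dataclass
class FakeGame:
    """Toy game state standing in for a running Minecraft client."""
    inventory: List[str] = field(default_factory=list)
    crash_triggers: Set[str] = field(default_factory=lambda: {"bugged_item"})
    crashed: bool = False


Macro = Callable[[FakeGame, str], None]


def give_item(game: FakeGame, item: str) -> None:
    """Hypothetical macro: add an item to the inventory."""
    game.inventory.append(item)


def use_item(game: FakeGame, item: str) -> None:
    """Hypothetical macro: using a held 'crash trigger' item marks a crash."""
    if item in game.inventory and item in game.crash_triggers:
        game.crashed = True


def execute_steps(steps: List[S2RStep], macros: Dict[str, Macro],
                  game: FakeGame) -> bool:
    """Stage 2 stand-in: run each step through the macro table and report
    whether the crash was triggered. (BugCraft's Action Model instead uses
    vision-based LLM agents to drive the game.)"""
    for step in steps:
        macro = macros.get(step.action)
        if macro is None:
            return False  # step cannot be executed, so reproduction fails
        macro(game, step.argument)
        if game.crashed:
            return True
    return game.crashed


def reproduce(bug_report: str, macros: Dict[str, Macro],
              game: FakeGame) -> bool:
    """End-to-end: bug report -> S2R steps -> execution -> crash or not."""
    steps = synthesize_steps(bug_report)
    return bool(steps) and execute_steps(steps, macros, game)


MACROS: Dict[str, Macro] = {"give": give_item, "use": use_item}

if __name__ == "__main__":
    report = "give: bugged_item\nuse: bugged_item"
    print(reproduce(report, MACROS, FakeGame()))
```

Separating the step synthesizer from the executor, as in this sketch, lets each stage be evaluated on its own. The abstract's results report the Step Synthesizer's plan accuracy separately from the end-to-end reproduction rate.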

Results: Evaluated on BugCraft-Bench, our framework with GPT-4.1 successfully reproduced 34.9% of crash bugs end-to-end, outperforming baseline models by 37%. The Step Synthesizer achieved 66.28% accuracy in generating correct bug reproduction plans, indicating that it effectively interprets and structures bug report information.

Figure 1: Overview of the BugCraft framework

Figure 2: The BugCraft framework, illustrating the two-stage process of S2R synthesis and action model execution.